The Art of Leading Data Projects: 8 Proven Strategies for Excellence

Mastering Data Leadership in a Rapidly Evolving World

In today’s digital landscape, data is the new currency—and those who can harness its power will drive business transformation, innovation, and competitive advantage. But leading a successful data project is no small feat. It requires a blend of strategic foresight, technical expertise, and leadership acumen to ensure that data initiatives align with business goals, deliver measurable outcomes, and create long-term value.

So, how do the best data leaders drive success? Here are 8 proven strategies that will help you master the art of leading data projects with excellence.

1. Align Data Strategy with Business Goals

Why It Matters: A data project that does not serve a clear business purpose is a wasted investment. Data leaders must ensure their projects are strategically aligned with corporate objectives.

How to Execute:

✅ Define a Clear Roadmap – Outline how your data initiative supports key business goals (e.g., cost reduction, customer insights, operational efficiency).

✅ Prioritize Projects Based on Impact – Focus on high-value use cases that solve real problems, not just trends.

✅ Involve Business Leaders Early – Ensure buy-in from decision-makers by demonstrating how your data initiatives drive growth.

✅ Measure and Communicate ROI – Develop a framework to track impact using business-focused KPIs.

🚀 Pro Tip: Use OKRs (Objectives & Key Results) to tie data initiatives to measurable business outcomes.
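
To make the OKR idea concrete, here is a minimal Python sketch of how key-result progress could be rolled up into an objective score. The objective, the key results, and the unweighted-average scoring rule are all illustrative assumptions; many teams weight key results or score them differently.

```python
from dataclasses import dataclass


@dataclass
class KeyResult:
    name: str
    start: float    # baseline value when the objective was set
    target: float   # value that counts as fully achieved
    current: float  # latest measured value

    def progress(self) -> float:
        """Fraction of the start-to-target distance covered, clamped to [0, 1]."""
        span = self.target - self.start
        if span == 0:
            return 1.0
        return max(0.0, min(1.0, (self.current - self.start) / span))


def objective_score(key_results: list[KeyResult]) -> float:
    """Unweighted mean of key-result progress (one common convention, assumed here)."""
    if not key_results:
        return 0.0
    return sum(kr.progress() for kr in key_results) / len(key_results)


# Hypothetical objective: "Make customer data trustworthy for reporting"
key_results = [
    KeyResult("Duplicate customer records (%)", start=8.0, target=2.0, current=5.0),
    KeyResult("Dashboards with a named owner (%)", start=40.0, target=100.0, current=70.0),
]

for kr in key_results:
    print(f"{kr.name}: {kr.progress():.0%}")
print(f"Objective score: {objective_score(key_results):.2f}")
```

Because progress is measured relative to the start value, the same formula works for key results that should go down (duplicates) and ones that should go up (ownership).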

2. Leverage Agile Execution

Why It Matters: Traditional waterfall project management is too rigid for the fast-moving world of data. Agile methodologies provide the flexibility needed to iterate, adapt, and continuously deliver value.

How to Execute:

✅ Adopt an Agile Framework Such as Scrum or SAFe – Break work into sprints, ensuring quick iterations and early feedback.

✅ Use Collaboration Tools – Platforms like Jira, Confluence, or Monday.com help track progress and optimize workflows.

✅ Implement Cross-Functional Teams – Bring together data engineers, analysts, and business users to eliminate silos.

✅ Emphasize Continuous Improvement – Run retrospectives to refine your approach based on past learnings.

🎯 Case Study: Spotify scaled its Agile framework across multiple teams, leading to faster innovation and better data-driven decision-making.

3. Optimize Resources & Budgets

Why It Matters: Data projects often fail due to poor resource allocation or cost overruns. Managing budgets effectively ensures sustainable execution.

How to Execute:

✅ Assess In-House vs. External Expertise – Leverage internal talent where possible, but don’t hesitate to hire specialized consultants.

✅ Use Cloud Services Efficiently – Optimize costs by selecting pay-as-you-go models (e.g., AWS Lambda, Google BigQuery).

✅ Automate Repetitive Tasks – Leverage AI and DevOps tools to reduce manual workload and lower operational costs.

✅ Monitor Financial KPIs – Track spend vs. value creation through real-time budget dashboards.

💡 Did You Know? Companies that optimize their cloud spending can cut costs by 30-50% without sacrificing performance.
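
As a rough illustration of tracking spend against value, the sketch below computes budget variance and a simple ROI from monthly figures. The numbers, the `MonthlyFinancials` structure, and the idea that "value delivered" can be estimated per month are assumptions for the example; in practice your finance team defines how value is measured.

```python
from dataclasses import dataclass


@dataclass
class MonthlyFinancials:
    month: str
    planned_spend: float
    actual_spend: float
    value_delivered: float  # estimated business value (assumed to be measurable)


def budget_variance(rows: list[MonthlyFinancials]) -> float:
    """(actual - planned) / planned; positive means over budget."""
    planned = sum(r.planned_spend for r in rows)
    actual = sum(r.actual_spend for r in rows)
    if planned <= 0:
        raise ValueError("planned spend must be positive")
    return (actual - planned) / planned


def roi(rows: list[MonthlyFinancials]) -> float:
    """(value - spend) / spend over the whole period."""
    spend = sum(r.actual_spend for r in rows)
    value = sum(r.value_delivered for r in rows)
    if spend <= 0:
        raise ValueError("actual spend must be positive")
    return (value - spend) / spend


# Hypothetical figures for one quarter
quarter = [
    MonthlyFinancials("Jan", 50_000, 46_000, 30_000),
    MonthlyFinancials("Feb", 50_000, 58_000, 75_000),
    MonthlyFinancials("Mar", 50_000, 52_000, 110_000),
]

print(f"Budget variance: {budget_variance(quarter):+.1%}")
print(f"ROI to date:     {roi(quarter):+.1%}")
```

Feeding these two numbers into a dashboard gives stakeholders a single view of whether the project is overspending and whether the spend is paying off.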

4. Ensure Compliance & Governance

Why It Matters: With growing concerns over data privacy and security, organizations must adopt strict compliance measures to avoid legal and reputational risks.

How to Execute:

✅ Comply with GDPR and Align with ISO 27001 – Ensure all data-handling processes meet the regulations and security standards that apply to your organization.

✅ Enforce Role-Based Access Control (RBAC) – Restrict data access based on user roles to enhance security.

✅ Develop a Data Stewardship Program – Assign accountability for data integrity and ethical use.

✅ Use Compliance Monitoring Tools – Platforms like Collibra, OneTrust, or BigID automate compliance tracking.
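
Role-based access control from the list above can be sketched in a few lines of Python. The role names and permissions here are hypothetical, and a real deployment would typically rely on the access controls of your database or cloud platform rather than an in-application dictionary.

```python
from enum import Enum, auto


class Permission(Enum):
    READ = auto()
    WRITE = auto()
    EXPORT = auto()


# Hypothetical role-to-permission mapping; adapt to your own organization.
ROLE_PERMISSIONS: dict[str, frozenset[Permission]] = {
    "analyst": frozenset({Permission.READ}),
    "data_engineer": frozenset({Permission.READ, Permission.WRITE}),
    "data_steward": frozenset({Permission.READ, Permission.WRITE, Permission.EXPORT}),
}


def is_allowed(role: str, permission: Permission) -> bool:
    """Deny by default: unknown roles get no permissions."""
    return permission in ROLE_PERMISSIONS.get(role, frozenset())


print(is_allowed("analyst", Permission.READ))    # analyst may read
print(is_allowed("analyst", Permission.EXPORT))  # analyst may not export
print(is_allowed("intern", Permission.READ))     # unknown role is denied
```

The key design choice is the deny-by-default lookup: access is granted only when a role explicitly carries the permission.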

⚠️ Fact: The most serious GDPR violations can result in fines of up to €20 million or 4% of total worldwide annual turnover for the preceding financial year, whichever is higher.
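
The "whichever is higher" rule is simply a maximum of two numbers. The short sketch below works through it for two hypothetical companies, using worldwide annual turnover as the basis for the 4% figure. It shows the upper limit only; actual fines are set case by case and are often far lower.

```python
def gdpr_max_fine_eur(annual_turnover_eur: float) -> float:
    """Upper limit for the most serious infringements: EUR 20M or 4% of turnover."""
    return max(20_000_000.0, 0.04 * annual_turnover_eur)


# Hypothetical turnovers: one mid-sized company, one large enterprise
for turnover in (100_000_000, 2_000_000_000):
    cap = gdpr_max_fine_eur(turnover)
    print(f"Turnover EUR {turnover:,} -> maximum fine EUR {cap:,.0f}")
```

For the smaller company the flat €20 million limit applies, while for the larger one the 4% figure takes over.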

5. Monitor KPIs for Performance

Why It Matters: Without data-driven performance tracking, it’s impossible to know if your project is succeeding or failing.

How to Execute:

✅ Define Clear Data KPIs – Examples include data accuracy, system uptime, query response time, and adoption rate.

✅ Build Real-Time Dashboards – Use tools like Tableau, Power BI, or Looker for live monitoring.

✅ Tie KPIs to Business Impact – Measure how your data initiative improves revenue, customer experience, or operational efficiency.

✅ Conduct Regular Performance Reviews – Ensure continuous optimization by adjusting strategies based on insights.

📊 Example KPI: Increase data processing speed by 50% within 6 months to support real-time analytics.
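
Here is one way the example KPI could be tracked, as a minimal sketch. It assumes "processing speed" means throughput in records per second (so a 50% increase is 1.5 times the baseline) and that progress is expected to be roughly linear over the six months. Both are assumptions, and the numbers are made up.

```python
def kpi_progress(baseline: float, target: float, current: float) -> float:
    """Share of the baseline-to-target gap that has been closed so far."""
    if target == baseline:
        raise ValueError("target must differ from baseline")
    return (current - baseline) / (target - baseline)


def is_on_track(progress: float, months_elapsed: int, months_total: int) -> bool:
    """Compare actual progress with a straight-line plan (an assumed trajectory)."""
    expected = months_elapsed / months_total
    return progress >= expected


# Hypothetical measurements, in records processed per second
baseline = 10_000.0
target = baseline * 1.5   # "increase speed by 50%"
current = 12_000.0        # measured after 3 of 6 months

progress = kpi_progress(baseline, target, current)
print(f"Progress toward target: {progress:.0%}")
print("On track" if is_on_track(progress, 3, 6) else "Behind plan")
```

A check like this can sit behind a dashboard tile so the review meeting starts from a clear on-track or behind-plan signal.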

6. Embrace AI & Cloud Technologies

Why It Matters: AI-driven analytics and cloud platforms offer scalability, speed, and cost efficiency, transforming how businesses manage data.

How to Execute:

✅ Leverage AI for Automation – Use machine learning models to enhance predictive analytics and decision-making.

✅ Adopt Scalable Cloud Solutions – Platforms like AWS, Azure, Snowflake, and Databricks ensure seamless data management.

✅ Integrate NLP & Computer Vision – Automate data extraction and analysis from unstructured sources.

✅ Deploy AI Chatbots for Data Queries – Enable users to interact with data using conversational AI.

🤖 Future Trend: By 2027, AI will handle 60% of enterprise data processing tasks autonomously.

👉 Check Out How My Team and I Built a Scalable AWS Web App from Scratch – A Game-Changing Project You Need to See!

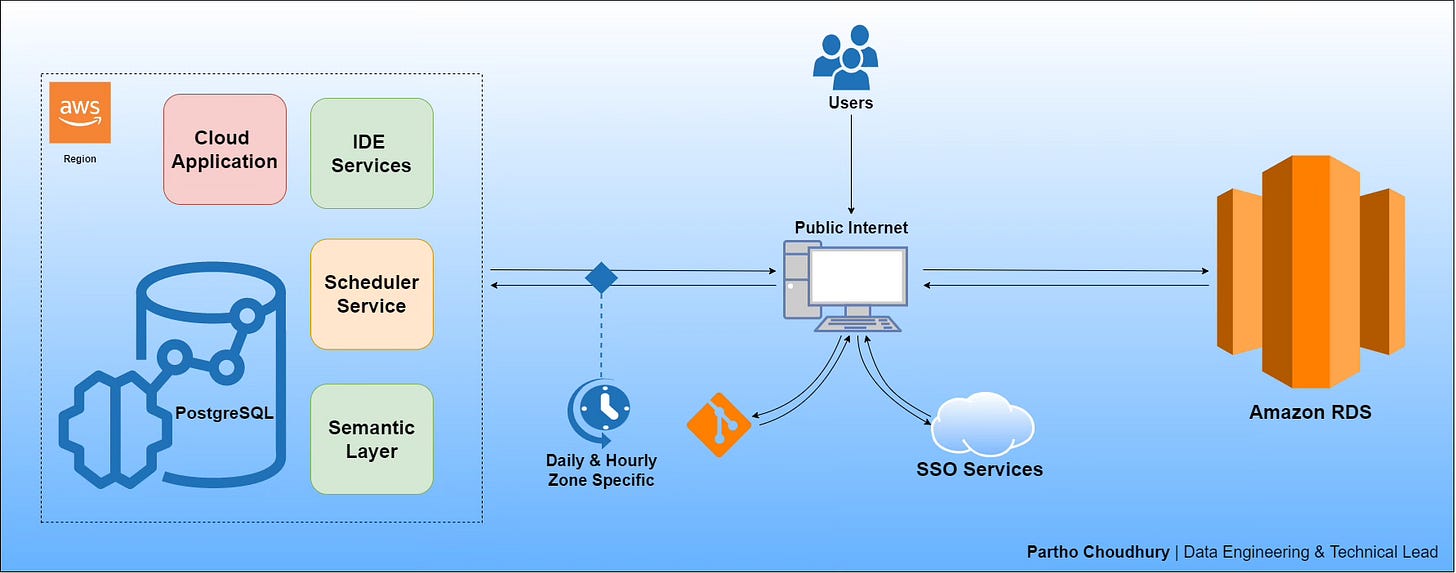

In today’s fast-paced digital landscape, cloud infrastructure offers immense scalability and performance benefits. One of my recent projects involved the creation of a highly scalable web application on AWS, designed to manage the operational and regulatory information of an agricultural company. The goal was to streamline data accessibility and enhance team collaboration by providing accurate, real-time regulatory information through a distributed relational database.

Key Project Insights:

• Problem to Solve: Operational test results, shipment documentation, and bag print layouts often had errors that were difficult to track, leading to inefficiencies and delays. The solution needed to track changes and maintain accuracy across different regions.

• Solution: By leveraging AWS Cloud and Terraform, we architected a 3-Tier Web Application that can scale seamlessly and ensure information accuracy across multiple stakeholders. This web application integrates a distributed relational database, ensuring data consistency and availability. It’s designed to meet both current and future needs, with a clear structure for role-based access to regulatory and transport documents.

• Optimization: The application automatically adjusts resources to handle increased traffic during peak times through auto-scaling, maximizing performance without overspending.
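
To give a feel for how auto-scaling decides on capacity, here is a simplified proportional rule in Python. The article does not say which scaling policy the project used, so this is an illustrative assumption and not AWS's actual algorithm: it sizes the fleet so that the average metric (for example CPU utilization) moves toward a target, within minimum and maximum bounds.

```python
import math


def desired_capacity(
    current_instances: int,
    current_metric: float,   # e.g. average CPU utilization in percent
    target_metric: float,    # utilization the fleet should hover around
    min_size: int,
    max_size: int,
) -> int:
    """Scale the instance count in proportion to metric/target, then clamp."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_instances * current_metric / target_metric)
    return max(min_size, min(max_size, raw))


# Hypothetical fleet with a 60% CPU target and bounds of 2 to 10 instances
print(desired_capacity(4, 90.0, 60.0, 2, 10))  # peak traffic: scale out
print(desired_capacity(4, 30.0, 60.0, 2, 10))  # quiet period: scale in
print(desired_capacity(8, 95.0, 60.0, 2, 10))  # demand exceeds the cap
```

The maximum bound is what keeps performance gains from turning into runaway spend, and the minimum bound protects availability during quiet periods.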

Project Breakdown:

1. Chapter 1: Planning & Overview – Introduction to building a 3-Tier Web Application in AWS for regulatory and operational data.

2. Chapter 2: Cloud Architecture – Detailed look at how we utilized AWS Cloud to power a classic 3-Tier Web Application, with emphasis on scalability and security.

3. Chapter 3: Cross-Region Deployment – Our approach to AWS cross-region deployment, addressing gaps in multi-region deployment support in AWS CodePipeline.

4. Chapter 4: CI/CD Pipeline Setup – Overview of setting up a robust CI/CD pipeline using AWS services to deploy the application across multiple environments (Development, Staging, and Production).

Benefits & Impact:

• Management of Change: Enabling teams to track and manage regulatory documentation, ensuring compliance with varying country-specific requirements.

• Performance Improvement: Significant reduction in non-conformities, processing time for regulatory document corrections, and issues related to bag printing.

Architectural Insights:

1. Security: We replaced traditional physical network appliances with software solutions to create a highly secure environment.

2. Dynamic Infrastructure: By treating hosts as ephemeral and dynamic, we designed the application to automatically scale, ensuring fault tolerance.

3. Data Flow & Optimization: Autoscaling and dynamic work rebalancing reduced costs and improved resource utilization.

This project embraces cloud technology’s full potential, offering a highly efficient and scalable solution for agricultural companies to manage regulatory data.

Through this approach, we’ve not only solved a critical business problem but also showcased the power of AWS Cloud and Terraform in transforming operational workflows.

7. Drive Adoption & Change Management

Why It Matters: A great data solution is useless if people don’t use it. Adoption is critical to long-term success.

How to Execute:

✅ Develop Data Literacy Training – Train employees on data tools, governance, and interpretation.

✅ Communicate Early & Often – Keep stakeholders engaged throughout the project lifecycle.

✅ Make Data Tools User-Friendly – Ensure intuitive UI/UX to encourage adoption.

✅ Show Quick Wins – Demonstrate early success stories to build momentum.

🎓 Example: Companies with strong data literacy programs are 3X more likely to outperform competitors.

8. Mitigate Risks Proactively

Why It Matters: Data projects come with inherent risks, from security breaches to system failures. Proactive risk management ensures stability.

How to Execute:

✅ Conduct Risk Assessments Early – Identify potential challenges before they become major issues.

✅ Implement Strong Cybersecurity Protocols – Encrypt data, use MFA, and monitor for threats.

✅ Develop Contingency Plans – Always have a backup strategy in case of failure.

✅ Use AI for Threat Detection – Leverage machine learning to predict and prevent security breaches.

🔥 Key Stat: An often-repeated, though poorly sourced, figure holds that 60% of small businesses close within 6 months of a cyberattack.
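
An early risk assessment can start as a simple risk register scored by likelihood and impact. The sketch below assumes a 1 to 5 scale for both and a score equal to their product, which is a common convention rather than the only one; the risks listed are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain)
    impact: int      # 1 (negligible) to 5 (severe)

    @property
    def score(self) -> int:
        """Likelihood times impact, so scores range from 1 to 25."""
        return self.likelihood * self.impact


def prioritize(risks: list[Risk]) -> list[Risk]:
    """Highest-scoring risks first, so mitigation effort goes where it matters."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


# Hypothetical register for a data platform project
register = [
    Risk("Key engineer leaves mid-project", likelihood=2, impact=3),
    Risk("Unencrypted data exposed in a breach", likelihood=2, impact=5),
    Risk("Source system schema changes break pipelines", likelihood=4, impact=3),
]

for risk in prioritize(register):
    print(f"{risk.score:>2}  {risk.name}")
```

Reviewing the sorted register at each planning cycle keeps contingency plans focused on the top few risks.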

Final Thoughts: The Future of Data Leadership

Leading a data-driven transformation is about more than just technology—it’s about strategy, execution, and leadership. By mastering these 8 proven strategies, organizations can ensure their data projects deliver real value, drive business impact, and future-proof their success.

🚀 Are you ready to lead the next big data revolution? Let’s make it happen.